BinPlay: A Binary Latent Autoencoder for Generative Replay Continual Learning

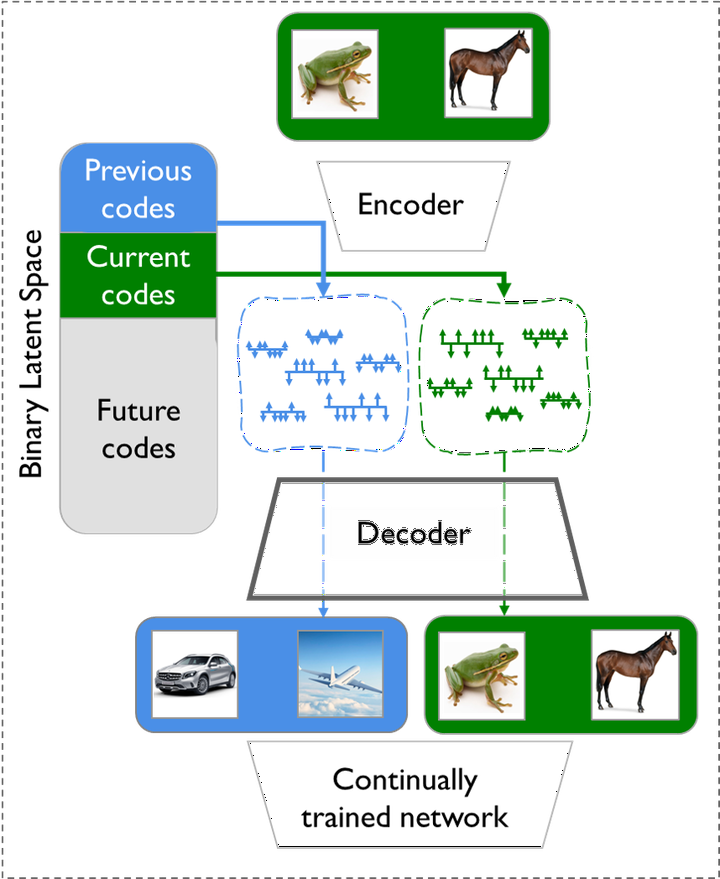

Overview of the BinPlay architecture for continual learning

Abstract

We introduce an autoencoder architecture with a binary latent space to rehearse training samples for the continual learning of neural networks. The ability to extend the knowledge of a model with new data without forgetting previously learned samples is a fundamental requirement in continual learning. Existing solutions address it either by replaying past data from memory, which is unsustainable as the training data grows, or by reconstructing past samples with generative models that are trained to generalize beyond the training data and hence miss important details of individual samples. In this paper, we take the best of both worlds and introduce a novel generative rehearsal approach called BinPlay. Its main objective is to find a quality-preserving encoding of past samples into precomputed binary codes living in the autoencoder’s binary latent space. Because the formula for precomputing the codes is parametrized only by the chronological indices of the training samples, the autoencoder can compute the binary embeddings of rehearsed samples on the fly, without keeping them in memory. Evaluation on three benchmark datasets shows up to a twofold accuracy improvement of BinPlay over competing generative replay methods.
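The idea of deriving a sample's binary code from its chronological index alone can be illustrated with a minimal sketch. The abstract does not state the actual formula, so the mapping below (the plain binary representation of the index) is only a hypothetical stand-in, and the names `index_to_code`, `rehearsal_codes`, and `code_length` are assumptions introduced for illustration. The sketch shows only that such codes can be regenerated on demand rather than stored; the decoder that would turn them back into samples is not shown.

```python
from typing import Iterator, List


def index_to_code(index: int, code_length: int = 16) -> List[int]:
    """Map a chronological sample index to a fixed-length binary code.

    Hypothetical stand-in for the paper's formula: the code is simply
    the binary representation of the index, most significant bit first.
    """
    if index < 0 or index >= 2 ** code_length:
        raise ValueError("index does not fit in code_length bits")
    return [(index >> bit) & 1 for bit in reversed(range(code_length))]


def rehearsal_codes(num_past_samples: int, code_length: int = 16) -> Iterator[List[int]]:
    """Regenerate the codes of all past samples from their indices alone.

    Nothing is stored: each code is recomputed when it is needed.
    """
    for index in range(num_past_samples):
        yield index_to_code(index, code_length)


# Codes for the first four samples, using 4-bit codes for readability.
codes = list(rehearsal_codes(4, code_length=4))
print(codes)
```

Because the mapping is deterministic, the only state needed to replay every past code is the number of samples seen so far.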