EgoNN: Egocentric Neural Network for Point Cloud Based 6DoF Relocalization at the City Scale

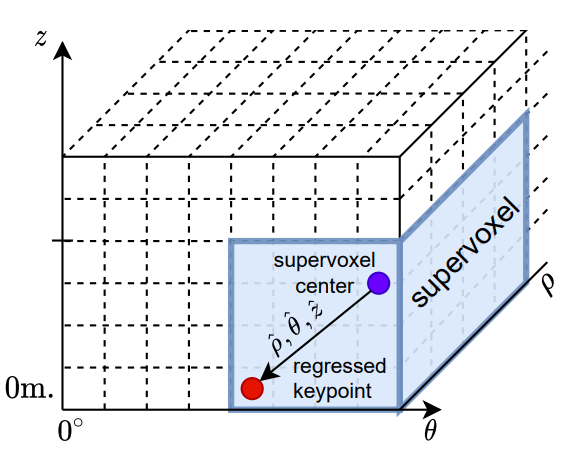

Illustration of the idea behind the keypoint position regressor. In each non-empty supervoxel, the cylindrical coordinates $(\hat{\rho}, \hat{\theta}, \hat{z})$ of a keypoint relative to the supervoxel center are regressed. Dashed lines indicate voxel boundaries.

Abstract

The paper presents a method based on a deep neural network for extracting global and local descriptors from a point cloud acquired by a rotating 3D LiDAR. The descriptors can be used for two-stage 6DoF relocalization. First, a coarse position is retrieved by finding candidates with the closest global descriptor in a database of geo-tagged point clouds. Then, the 6DoF pose between the query point cloud and a database point cloud is estimated by matching local descriptors and using a robust estimator such as RANSAC. Our method has a simple, fully convolutional architecture based on a sparse voxelized representation. It can efficiently extract a global descriptor and a set of keypoints with local descriptors from large point clouds with tens of thousands of points. Our code and pretrained models are publicly available on the project website.
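The two-stage relocalization described above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the global and local descriptors are assumed to be already computed by the network, local descriptors are matched by plain nearest-neighbour search, and all function names and parameters (`retrieve_candidates`, `match_descriptors`, `fit_rigid`, `ransac_pose`, `inlier_thresh`, and so on) are hypothetical.

```python
import numpy as np


def retrieve_candidates(query_desc, db_descs, k=1):
    """Stage 1: indices of the k database global descriptors closest to the query."""
    dists = np.linalg.norm(db_descs - query_desc, axis=1)
    return np.argsort(dists)[:k]


def match_descriptors(query_local, db_local):
    """For each query keypoint, index of the nearest database local descriptor."""
    d = np.linalg.norm(query_local[:, None, :] - db_local[None, :, :], axis=2)
    return np.argmin(d, axis=1)


def fit_rigid(src, dst):
    """Least-squares R, t such that dst ~= src @ R.T + t (Kabsch algorithm)."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so that R is a proper rotation (det = +1).
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])
    R = Vt.T @ D @ U.T
    t = dst_c - R @ src_c
    return R, t


def ransac_pose(src, dst, n_iters=200, inlier_thresh=0.5, seed=0):
    """Stage 2: robust 6DoF pose from putative keypoint correspondences.

    src[i] and dst[i] are the 3D positions of the i-th matched keypoint pair.
    The iteration count and threshold are illustrative values only.
    """
    rng = np.random.default_rng(seed)
    best_inliers = np.zeros(len(src), dtype=bool)
    for _ in range(n_iters):
        # Three correspondences are the minimal sample for a rigid transform.
        idx = rng.choice(len(src), size=3, replace=False)
        R, t = fit_rigid(src[idx], dst[idx])
        residuals = np.linalg.norm(src @ R.T + t - dst, axis=1)
        inliers = residuals < inlier_thresh
        if inliers.sum() > best_inliers.sum():
            best_inliers = inliers
    if best_inliers.sum() < 3:
        raise ValueError("RANSAC found no hypothesis with at least 3 inliers")
    # Refit on all inliers of the best hypothesis.
    R, t = fit_rigid(src[best_inliers], dst[best_inliers])
    return R, t, best_inliers
```

In use, `retrieve_candidates` would select one or more database point clouds, `match_descriptors` would pair the query keypoints with those of a candidate, and `ransac_pose` would then be called on the matched keypoint coordinates to reject wrong matches and estimate the rotation and translation.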