SuperNCN: Neighbourhood consensus network for robust outdoor scenes matching

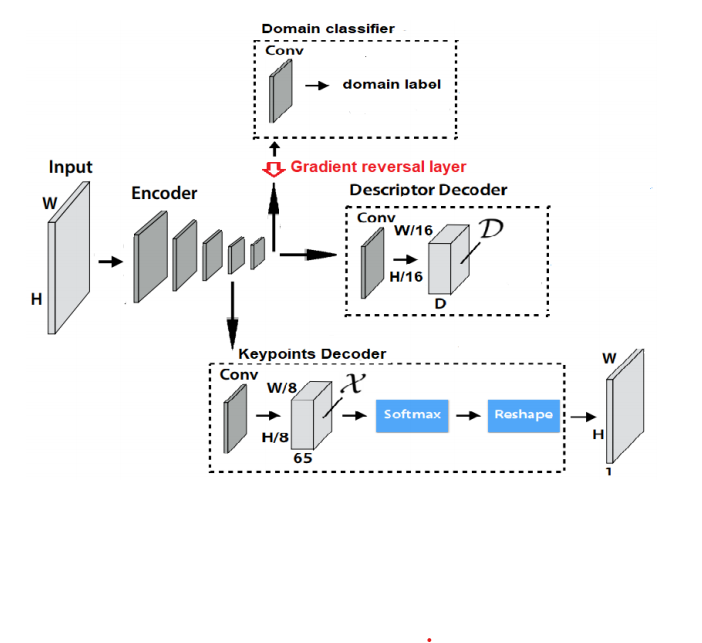

Architecture of one twin module of the Siamese network used to train the keypoint detector and the domain-invariant descriptor.


Abstract

In this paper, we present a framework for computing dense keypoint correspondences between images under strong scene appearance changes. Traditional methods, based on nearest neighbour search in the feature descriptor space, perform poorly when environmental conditions vary, e.g. when images are taken at different times of the day or in different seasons. Our method improves keypoint matching under such difficult conditions. First, we use Neighbourhood Consensus Networks to build a spatially consistent matching grid between the two images at a coarse scale. Then, we apply a SuperPoint-like corner detector to achieve pixel-level accuracy. Both parts use features learned with domain adaptation to increase robustness against strong scene appearance variations. The framework has been tested on the RobotCar Seasons dataset, showing a large improvement on the pose estimation task under challenging environmental conditions.
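The coarse-to-fine idea can be pictured with a minimal NumPy sketch. It is an illustration under stated assumptions, not the SuperNCN implementation: the learned 4D convolutions of Neighbourhood Consensus Networks are replaced by a fixed box filter over the 4D match space, the coarse feature maps `fa`/`fb` and the full-resolution detector `heatmap` are assumed inputs, and all function names (`correlation_4d`, `box_consensus`, `mutual_nn_matches`, `refine_in_cell`) are hypothetical.

```python
import numpy as np


def l2_normalize(f, eps=1e-8):
    # Normalise descriptors along the channel axis so dot products are cosines.
    return f / (np.linalg.norm(f, axis=-1, keepdims=True) + eps)


def correlation_4d(fa, fb):
    # fa: (Ha, Wa, C) and fb: (Hb, Wb, C) coarse feature maps (assumed inputs).
    # Returns a (Ha, Wa, Hb, Wb) tensor of cosine similarities for every cell pair.
    return np.einsum("ijc,klc->ijkl", l2_normalize(fa), l2_normalize(fb))


def box_consensus(corr, k=3):
    # Averages each match score over its k x k x k x k neighbourhood in the
    # 4D match space (k assumed odd, zero padding at the borders).
    # Hypothetical stand-in for the learned 4D convolutions of NCNet:
    # a match supported by neighbouring matches keeps a high score.
    r = k // 2
    padded = np.pad(corr, r)  # constant (zero) padding on all four axes
    ha, wa, hb, wb = corr.shape
    out = np.zeros_like(corr)
    for a in range(k):
        for b in range(k):
            for c in range(k):
                for d in range(k):
                    out += padded[a:a + ha, b:b + wa, c:c + hb, d:d + wb]
    return out / k ** 4


def mutual_nn_matches(corr):
    # Keeps cell pairs that are each other's best match in both directions.
    ha, wa, hb, wb = corr.shape
    flat = corr.reshape(ha * wa, hb * wb)
    a_to_b = flat.argmax(axis=1)  # best cell in B for each cell in A
    b_to_a = flat.argmax(axis=0)  # best cell in A for each cell in B
    return [
        (divmod(ia, wa), divmod(int(ib), wb))
        for ia, ib in enumerate(a_to_b)
        if b_to_a[ib] == ia
    ]


def refine_in_cell(heatmap, cell, stride):
    # Moves a coarse cell (row, col) to pixel level by picking the highest
    # detector response inside that cell (hypothetical SuperPoint-like step).
    i, j = cell
    patch = heatmap[i * stride:(i + 1) * stride, j * stride:(j + 1) * stride]
    di, dj = np.unravel_index(np.argmax(patch), patch.shape)
    return int(i * stride + di), int(j * stride + dj)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    fa = rng.normal(size=(6, 8, 64))
    fb = np.roll(fa, 1, axis=1)  # image B: same content shifted one cell (wraps)
    matches = mutual_nn_matches(box_consensus(correlation_4d(fa, fb)))
    print(len(matches), "coarse matches")
```

The box filter only conveys why consensus helps: an isolated high score surrounded by low scores is damped, while a score backed by a consistent local shift survives. In the method described above this filtering is learned, and the descriptors come from the domain-adapted network rather than random vectors.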