Siamese generative adversarial privatizer for biometric data

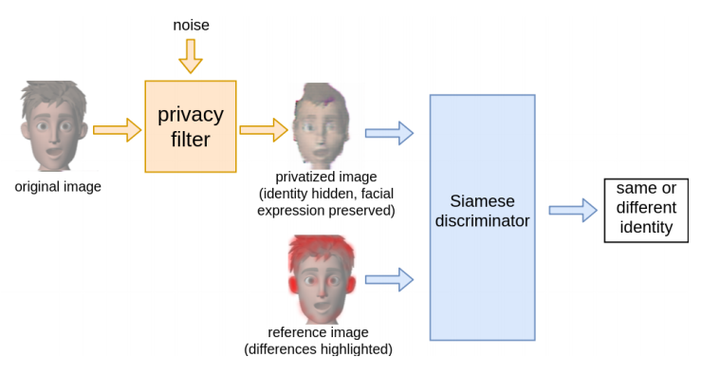

Basic functionality of the proposed Siamese Generative Adversarial Privatizer

Abstract

State-of-the-art machine learning algorithms can be fooled by carefully crafted adversarial examples. As such, adversarial examples present a concrete problem in AI safety. In this work, we turn the tables and ask the following question: can we harness the power of adversarial examples to prevent malicious adversaries from learning identifying information from data, while still allowing non-malicious entities to benefit from the utility of the same data? For instance, can we use adversarial examples to anonymize a biometric dataset of faces while retaining the usefulness of this data for other purposes, such as emotion recognition? To address this question, we propose a simple yet effective method, called Siamese Generative Adversarial Privatizer (SGAP), that exploits the properties of a Siamese neural network to find discriminative features that convey identifying information. When coupled with a generative model, our approach is able to correctly locate and disguise identifying information, while minimally reducing the utility of the privatized dataset. Extensive evaluation on a biometric dataset of fingerprints and cartoon faces confirms the usefulness of our method.
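To make the interplay between the Siamese network and the generative model more concrete, the following is a minimal sketch of how such an objective could be set up. The abstract does not specify the losses used by SGAP, so everything here is an assumption for illustration: the contrastive loss is a common choice for Siamese networks, and `privatizer_objective`, `margin`, and `distortion_weight` are hypothetical names and parameters, not the authors' formulation.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def contrastive_loss(emb_a, emb_b, same_identity, margin=1.0):
    """Standard contrastive loss for one pair of embeddings.

    Same-identity pairs are penalized for being far apart;
    different-identity pairs are penalized for being closer than `margin`.
    """
    d = euclidean(emb_a, emb_b)
    if same_identity:
        return d ** 2
    return max(0.0, margin - d) ** 2


def privatizer_objective(original, privatized, emb_original, emb_privatized,
                         margin=1.0, distortion_weight=0.1):
    """Hypothetical privatizer objective (an assumption, not the paper's loss).

    First term: the privatizer wants the Siamese embedding of the privatized
    sample to look like a different identity than the original.
    Second term: the privatized sample should stay close to the original in
    input space, as a crude stand-in for preserving utility.
    """
    identity_term = contrastive_loss(emb_original, emb_privatized,
                                     same_identity=False, margin=margin)
    distortion_term = euclidean(original, privatized) ** 2
    return identity_term + distortion_weight * distortion_term
```

In this sketch, lowering `distortion_weight` lets the privatizer alter the input more aggressively to hide identity, while raising it favors preserving the original data. In a real system both the embeddings and the privatized sample would be produced by trained networks rather than passed in as plain lists.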