Non-Gaussian Gaussian Processes for Few-Shot Regression

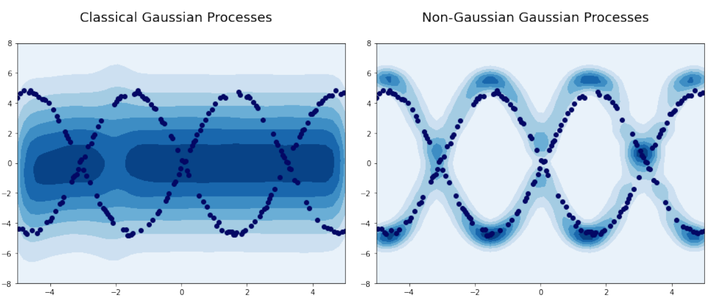

Results of Deep Kernels with a classical GP (left) and NGGP (right). The one-dimensional samples were randomly generated from the $\sin(x)$ and $-\sin(x)$ functions with additional noise. Unlike a GP, NGGP does not assume a Gaussian prior, which allows it to model a multi-modal distribution.
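
The toy setting in the figure can be reproduced roughly with the sketch below, which shows why a single Gaussian is a poor fit. The caption does not give the input range, noise level, or support-set size, so the values used here (inputs in $[-5, 5]$, noise standard deviation 0.1, 10 support points, equal probability for each sign) and the helper `sample_task` are hypothetical.

```python
import numpy as np

def sample_task(rng, x, noise_std=0.1):
    """Hypothetical generator for one task: y = s * sin(x) + noise, with s = +1 or -1."""
    sign = rng.choice([-1.0, 1.0])  # each task follows sin(x) or -sin(x) with equal probability
    return sign * np.sin(x) + rng.normal(0.0, noise_std, size=np.shape(x))

rng = np.random.default_rng(0)

# A single few-shot support set: 10 noisy points from one of the two functions.
x_support = rng.uniform(-5.0, 5.0, size=10)
y_support = sample_task(rng, x_support)

# Across many tasks, the target at a fixed input is bimodal (near +1 and -1 at x0 = pi/2),
# so a single Gaussian centred on the overall mean (about 0) puts its mass where no data lie.
x0 = np.array([np.pi / 2])
y0 = np.array([sample_task(rng, x0)[0] for _ in range(1000)])
print(f"mean={y0.mean():.3f}, fraction near 0: {np.mean(np.abs(y0) < 0.5):.3f}")
```

The mean of `y0` lies close to zero while almost no samples do, which is the multi-modality that the right-hand panel captures and the left-hand panel cannot.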

Abstract

Gaussian Processes (GPs) have been widely used in machine learning to model distributions over functions, with applications including multi-modal regression, time-series prediction, and few-shot learning. GPs are particularly useful in the last application because they rely on Normal distributions and therefore enable closed-form computation of the posterior probability function. Unfortunately, the resulting posterior is not flexible enough to capture complex distributions, so GPs assume high similarity between subsequent tasks, a requirement rarely met in real-world conditions. In this work, we address this limitation by leveraging the flexibility of Normalizing Flows to modulate the posterior predictive distribution of the GP. This makes the GP posterior locally non-Gaussian; hence we name our method Non-Gaussian Gaussian Processes (NGGPs). More precisely, we propose an invertible ODE-based mapping that operates on each component of the random variable vectors and shares its parameters across all of them. We empirically test the flexibility of NGGPs on various few-shot regression datasets, showing that the mapping can incorporate context embedding information to model different noise levels for periodic functions. As a result, our method shares the structure of the problem between subsequent tasks, while the contextualization allows it to adapt to dissimilarities. NGGPs outperform competing state-of-the-art approaches on a diverse set of benchmarks and applications.
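
The two ingredients named in the abstract, a closed-form GP posterior and an invertible element-wise ODE mapping with shared parameters, can be illustrated with the minimal NumPy sketch below. It is not the authors' implementation: the RBF kernel (the paper uses deep kernels), the scalar dynamics $f(z) = a\tanh(wz + b)$ with hypothetical shared parameters `THETA`, the fixed-step RK4 solver, and the omission of the context-embedding conditioning are all assumptions. Because the mapping acts on each component separately, its Jacobian is diagonal, so the change-of-variables formula reduces to $\log p(y) = \log \mathcal{N}(z; \mu, \Sigma) - \sum_i \log\lvert \partial y_i / \partial z_i \rvert$, where each log-derivative is obtained by integrating $\partial f / \partial z$ along the trajectory.

```python
import numpy as np

def rbf_kernel(a, b, lengthscale=1.0, variance=1.0):
    # Squared-exponential kernel on 1-D inputs (assumed stand-in for a deep kernel).
    d = a[:, None] - b[None, :]
    return variance * np.exp(-0.5 * (d / lengthscale) ** 2)

def gp_posterior(x_train, y_train, x_test, noise_var=0.01):
    # Closed-form GP posterior mean and covariance at x_test via a Cholesky factorization.
    K = rbf_kernel(x_train, x_train) + noise_var * np.eye(len(x_train))
    K_s = rbf_kernel(x_train, x_test)
    L = np.linalg.cholesky(K)
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y_train))
    v = np.linalg.solve(L, K_s)
    return K_s.T @ alpha, rbf_kernel(x_test, x_test) - v.T @ v

def mvn_logpdf(z, mean, cov):
    # Log-density of a multivariate normal, computed with a Cholesky factor.
    L = np.linalg.cholesky(cov)
    diff = np.linalg.solve(L, z - mean)
    return -0.5 * diff @ diff - np.log(np.diag(L)).sum() - 0.5 * len(z) * np.log(2 * np.pi)

# Hypothetical shared parameters (a, w, b) of the scalar ODE dz/dt = a * tanh(w * z + b).
THETA = (0.8, 1.5, 0.0)

def dynamics(z, theta):
    a, w, b = theta
    h = np.tanh(w * z + b)
    # Return dz/dt and its derivative with respect to z (needed for the log-Jacobian).
    return a * h, a * w * (1.0 - h ** 2)

def flow(z0, theta=THETA, t1=1.0, steps=100):
    """Integrate the element-wise ODE with RK4; every component shares theta.

    Returns the mapped values and log|dz(t1)/dz(0)| per component, obtained by
    integrating d(log|dz/dz0|)/dt = df/dz alongside the state."""
    dt = t1 / steps
    z = np.asarray(z0, dtype=float).copy()
    logdet = np.zeros_like(z)
    for _ in range(steps):
        k1, g1 = dynamics(z, theta)
        k2, g2 = dynamics(z + 0.5 * dt * k1, theta)
        k3, g3 = dynamics(z + 0.5 * dt * k2, theta)
        k4, g4 = dynamics(z + dt * k3, theta)
        z = z + dt / 6.0 * (k1 + 2 * k2 + 2 * k3 + k4)
        logdet = logdet + dt / 6.0 * (g1 + 2 * g2 + 2 * g3 + g4)
    return z, logdet

rng = np.random.default_rng(0)
x_train = rng.uniform(-3.0, 3.0, size=8)
y_train = np.sin(x_train)
x_test = np.array([-1.0, 0.5, 2.0])

mean, cov = gp_posterior(x_train, y_train, x_test)
cov = cov + 1e-6 * np.eye(len(x_test))   # jitter for numerical stability
z = rng.multivariate_normal(mean, cov)    # Gaussian sample from the GP posterior
y, logdet = flow(z)                       # non-Gaussian sample after the shared mapping
log_p_y = mvn_logpdf(z, mean, cov) - logdet.sum()
print(y, log_p_y)
```

Integrating the log-derivative together with the state avoids differentiating through the solver; the fixed-step solver is used here only to keep the example dependency-free.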