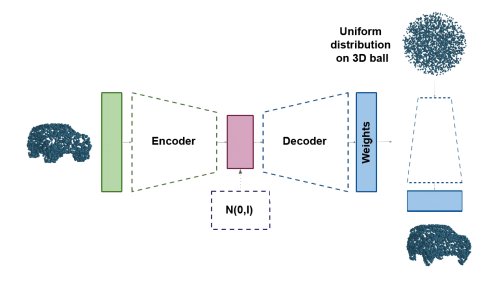

Pipeline of our HyperNetwork approach

Pipeline of our HyperNetwork approach

Abstract

In this work, we propose a novel method for generating 3D point clouds that leverage properties of hyper networks. Contrary to the existing methods that learn only the representation of a 3D object, our approach simultaneously finds a representation of the object and its 3D surface. The main idea of our HyperCloud method is to build a hyper network that returns weights of a particular neural network (target network) trained to map points from a uniform unit ball distribution into a 3D shape. As a consequence, a particular 3D shape can be generated using point-by-point sampling from the assumed prior distribution and transforming sampled points with the target network. Since the hyper network is based on an auto-encoder architecture trained to reconstruct realistic 3D shapes, the target network weights can be considered a parametrization of the surface of a 3D shape, and not a standard representation of point cloud usually returned by competitive approaches. The proposed architecture allows finding mesh-based representation of 3D objects in a generative manner while providing point clouds en pair in quality with the state-of-the-art methods.